R&D

As Innovation Director, I spend a lot of time exploring emerging technologies. That means not just (re)searching but getting hands-on: building prototypes with a large group of amazingly talented people.

Below are some of the R&D projects I had a part in.

Metaverse demo - Roblox

The metaverse may be on everyone's mind, but it's not the easiest thing to envision. So the Labs.Monks built their own bite-sized version of it in Roblox. The demo follows a day in the life of a metaverse visitor. Using Roblox Studio, we created three distinct worlds, each showcasing a different kind of activity in the metaverse: a hub for storing personal info (such as currencies and digital assets); a social environment for sharing and connecting with others in real time; and a shopping district for browsing products and services in one place (NFTs, food ordering and try-ons with Snapchat).

C.A.S.E. VoiceBot

CASE (Conversational Automobile Selector Experiment) is a voice-based prototype that brings the dealership car-discovery experience into your own home, without the industry lingo. Combining voice, on-screen prompts and an open-ended conversational approach, CASE identifies the vehicles best suited to you based on your lifestyle.

CASE uses Alexa Conversations, Amazon's new deep-learning-based approach to dialog management (in beta as of August 2021). This enables human-like conversations and lets the skill call external APIs that run recommendation algorithms on the user's responses.

We also integrated the Alexa Presentation Language (APL) to give the voice conversation visual context, along with an extensive car database that offers users thousands of options.

Machine Learning + video conference gaming

The WFH trials of 2020 called for a special kind of entertainment: one that got people off the couch and away from their desks. Our Labs team used machine learning to create a video conferencing game that gets everyone moving. Using TensorFlow’s object-recognition model, we trained the game to recognise more than 50 common household items. The game randomly picks an object and challenges players to find it and hold it up to their camera to score a point. Fun guaranteed; stable internet connection not included.
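The round logic described above (pick a random object, award a point when a player's camera shows it) can be sketched in a few lines. This is a minimal illustration, not the game's actual code: it assumes the model's output for each frame has already been reduced to a list of `(label, confidence)` pairs, and the item list, the 0.6 confidence threshold and all function names are hypothetical.

```python
import random

# Hypothetical subset of the household items the model recognises.
HOUSEHOLD_ITEMS = ["mug", "spoon", "book", "remote control", "banana"]

# Assumed minimum confidence before a detection counts as a match.
CONFIDENCE_THRESHOLD = 0.6


def pick_target(items, rng=random):
    """Randomly choose the object players must find this round."""
    return rng.choice(items)


def frame_matches(target, detections, threshold=CONFIDENCE_THRESHOLD):
    """Return True if any (label, confidence) detection is the target.

    `detections` is assumed to be a list of (label, confidence) pairs
    produced by an object-recognition model for one camera frame.
    """
    return any(label == target and conf >= threshold for label, conf in detections)


def update_scores(scores, player, target, detections):
    """Give `player` a point if their frame shows the target object."""
    if frame_matches(target, detections):
        scores[player] = scores.get(player, 0) + 1
    return scores


if __name__ == "__main__":
    target = pick_target(HOUSEHOLD_ITEMS, random.Random(42))
    scores = {}
    # Hypothetical detections: one confident match, one too uncertain to count.
    update_scores(scores, "alice", target, [(target, 0.91), ("book", 0.40)])
    update_scores(scores, "bob", target, [(target, 0.35)])
    print(target, scores)
```

The threshold keeps low-confidence guesses from scoring, so a blurry or half-hidden object has to be held up more clearly before it counts.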

Visual Voice Bot - Alice

After his codebreaking work during WWII, Alan Turing proposed (in 1950) a hypothetical test of whether a machine can exhibit human-like intelligence. We put a twist on the classic Turing test to see whether AI can not only act human but also gauge our emotions. Using Soul Machines, we created Alice: a virtual human who analyses your body language and facial expressions and reacts emotionally to your answers. Her task is to work out whether you're a friend or foe of AI by analysing how you respond to a series of questions. Can you hide your true emotions?

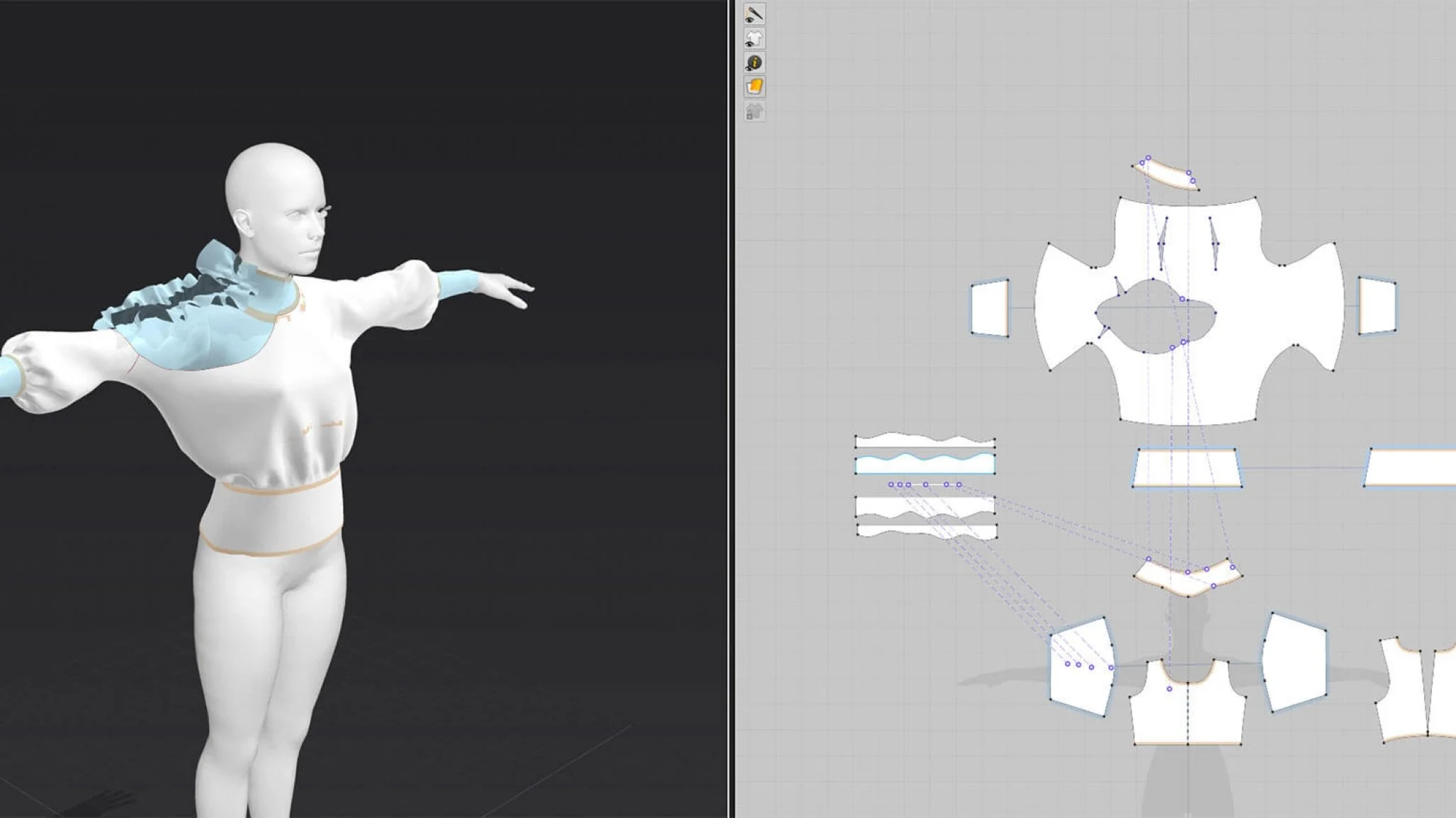

Virtual Garment Lens

Trying on garments in AR is becoming ever more popular in fashion. To test Snapchat’s 3D body-tracking capabilities, we created a Lens that lets you virtually try on garments that follow your body movements. The Lens matches the garment's skeleton to your body so that it follows your shape and posture. As you move, the virtual fabric shows where the garment would wrinkle and fold. By turning Snapchat into a virtual wardrobe, brands can augment the way they showcase their designs, for example by adding graphical elements such as text overlays. Want to virtually dress to impress? Give the Lens a try!

Virtual Esports Stadium

To explore what the future might hold for esports fans, we prototyped a virtual stadium inside Mozilla Hubs. Fans can visit the stadium in VR or in their browser to experience esports in a more immersive way. The prototype lets fans watch PlayerUnknown’s Battlegrounds matches on a big virtual screen as well as on a 3D map that shows players' live locations, pulled from the game’s API. By letting fans experience the action from new perspectives while interacting with other spectators, virtual stadiums might represent the next stage in esports.

Image Recognition Comparison Tool

To shine a light into the black box of image-classification tools that use computer vision to identify objects, we developed a meta-tool that pits the most popular platforms against each other. Our web-based tool lets anyone compare the computer vision capabilities of Amazon, Baidu, Face++ and Google side by side. It reveals how each platform classifies objects in the same image by listing its top labels along with their confidence scores. The tool showed that Amazon is the most capable at classifying products and that Google gives more contextually specific labels, which helps us pick the best platform for each client and project.
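The side-by-side view boils down to ranking each platform's labels by confidence and lining them up row by row. The sketch below assumes each platform's response has already been normalised into a list of `(label, confidence)` pairs (each vendor's real API returns its own format); the provider names, labels and scores in the test data are placeholders, not real results.

```python
def top_labels(labels, n=5):
    """Sort one platform's (label, confidence) pairs and keep the top n."""
    return sorted(labels, key=lambda pair: pair[1], reverse=True)[:n]


def side_by_side(results, n=5):
    """Build a comparison table from normalised per-platform results.

    `results` maps a platform name to a list of (label, confidence) pairs,
    with confidence in the range 0..1. Returns (platforms, rows), where each
    row is [rank, cell for platform 1, cell for platform 2, ...].
    """
    platforms = sorted(results)
    ranked = {p: top_labels(results[p], n) for p in platforms}
    rows = []
    for i in range(n):
        row = [str(i + 1)]
        for p in platforms:
            if i < len(ranked[p]):
                label, conf = ranked[p][i]
                row.append(f"{label} ({conf:.0%})")
            else:
                row.append("")  # this platform returned fewer than n labels
        rows.append(row)
    return platforms, rows


if __name__ == "__main__":
    # Placeholder data for illustration only.
    demo = {
        "provider_a": [("cup", 0.85), ("mug", 0.97)],
        "provider_b": [("drinkware", 0.90)],
    }
    header, table = side_by_side(demo, n=2)
    print(["rank"] + header)
    for row in table:
        print(row)
```

Normalising every response into the same shape first keeps the comparison logic independent of any single vendor's response format.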

Web-based computer vision - Person Blocker

Using the machine learning model BodyPix, we developed a ‘person blocker’ that can segment people out of a frame in real time, entirely within your browser. The web app is inspired by the ‘Z-Eyes’ from Black Mirror, which let people block others out of their vision. By identifying up to 24 body parts, our application can separate a person from their environment and replace those pixels with something else. The application can be used to censor people, but the underlying technology could also serve advertising, by replacing a range of moving objects with branded content or vice versa.
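Once a segmentation model has labelled each pixel, the blocking step itself is a per-pixel swap. This minimal sketch assumes the model's output has been reduced to a 0/1 mask (1 where a person is detected) with the same dimensions as the frame; it uses plain nested lists instead of real image buffers, and `block_people` and its `invert` flag are hypothetical names. Setting `invert=True` covers the "vice versa" case by replacing everything except the person.

```python
def block_people(frame, mask, replacement, invert=False):
    """Return a copy of `frame` with masked pixels taken from `replacement`.

    frame:       rows of RGB tuples (the camera image)
    mask:        rows of 0/1 values, assumed 1 where the model sees a person
    replacement: rows of RGB tuples (e.g. a background plate or branded image)
    invert:      if True, replace the background and keep the person instead
    """
    if not (len(frame) == len(mask) == len(replacement)):
        raise ValueError("frame, mask and replacement must have the same height")
    out = []
    for f_row, m_row, r_row in zip(frame, mask, replacement):
        if not (len(f_row) == len(m_row) == len(r_row)):
            raise ValueError("frame, mask and replacement must have the same width")
        # A pixel is swapped when its mask value (optionally inverted) is set.
        out.append([r if bool(m) != invert else f
                    for f, m, r in zip(f_row, m_row, r_row)])
    return out


if __name__ == "__main__":
    frame = [[(200, 180, 160), (30, 90, 30)]]   # person pixel, background pixel
    mask = [[1, 0]]
    backdrop = [[(0, 0, 0), (0, 0, 0)]]
    print(block_people(frame, mask, backdrop))               # person blocked out
    print(block_people(frame, mask, backdrop, invert=True))  # background replaced
```

In the browser the same idea would run on typed pixel arrays for every video frame; the nested lists here only keep the sketch short and dependency-free.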

AR markerless object tracking - Polestar

The Polestar 1 Experience is a high-end car demonstration that lets car-show visitors explore the new Volvo Polestar through augmented reality. Using an iPad Pro, visitors see a 3D model overlaid on the physical car. The virtual layer shows the new car's features in action, including its carbon-fiber body and drive system. We produced both the app and the 3D-animated car model. The Polestar 1 Experience was originally developed for the Geneva Motor Show but is now touring car shows around the world.